Numerical Methods for Engineers

Abstract

AI

AI

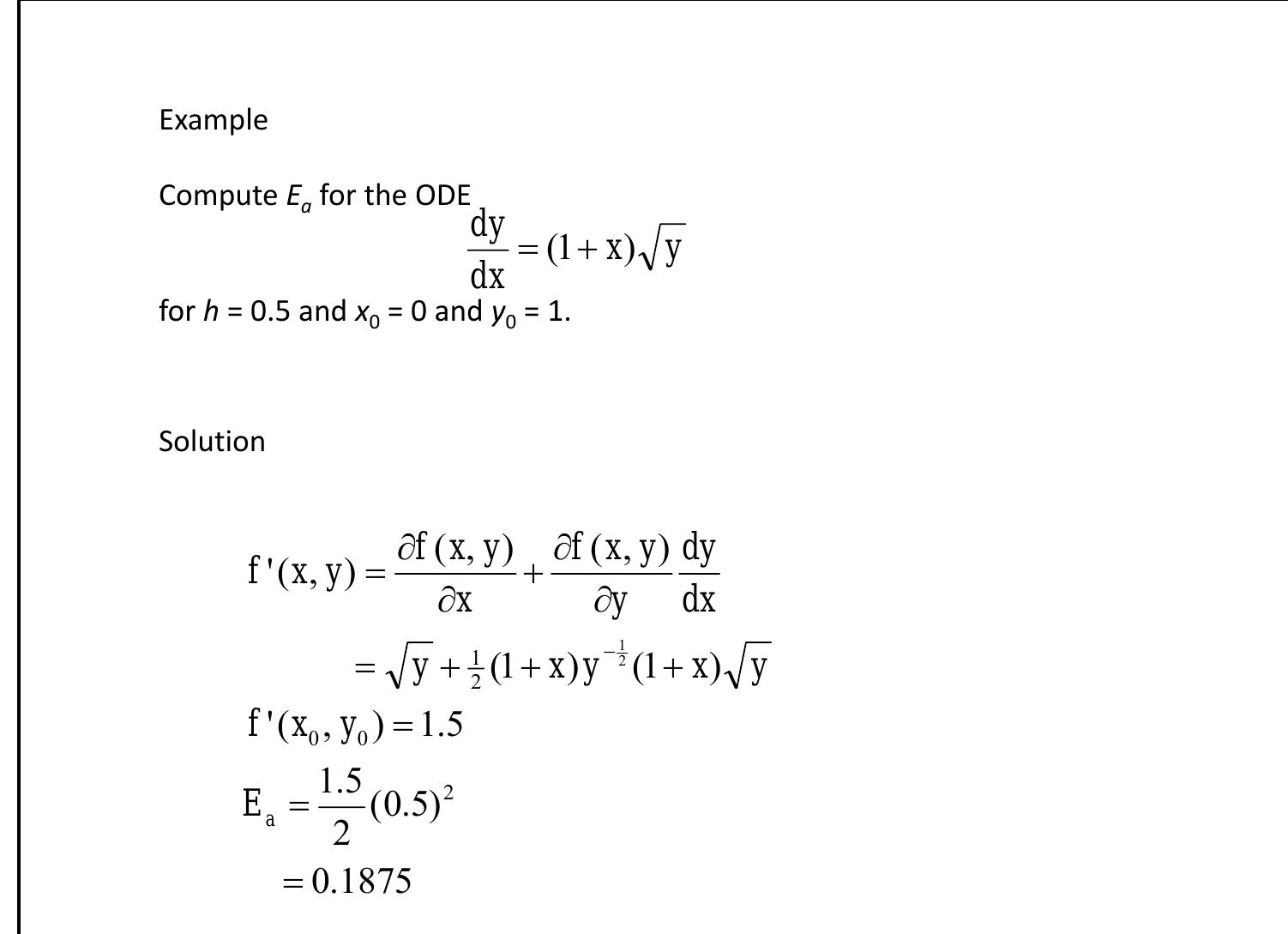

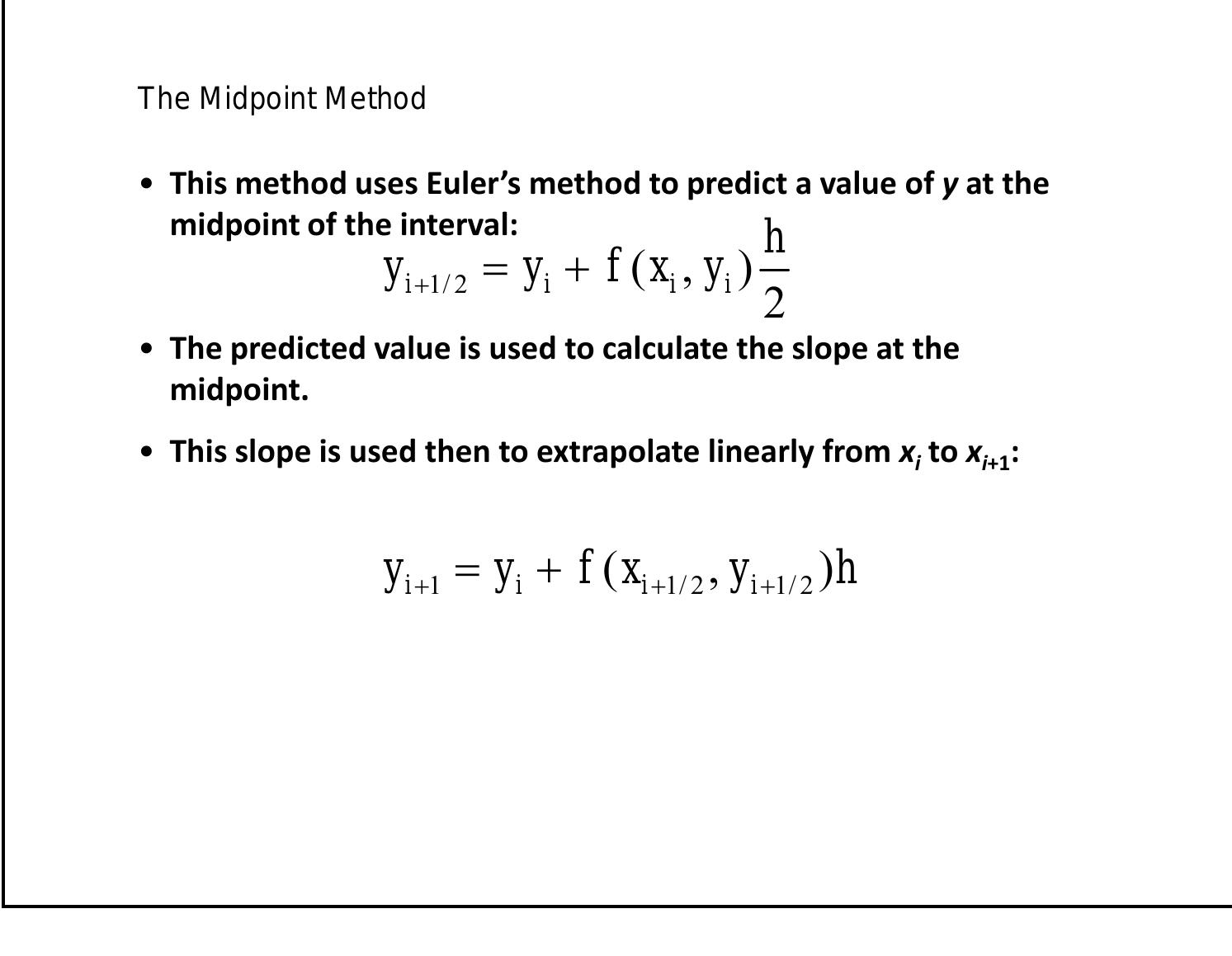

Numerical methods are essential for addressing complex mathematical and engineering problems that may not have exact analytical solutions. This document compares analytical and numerical approaches, highlighting the necessity of numerical methods in situations where true values are elusive. Emphasis is placed on techniques such as iterative approaches for error normalization and specific methods like the predictor-corrector method for numerical integration. Various numerical techniques are introduced, demonstrating their application through practical examples.
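The abstract mentions the predictor-corrector method. As a minimal sketch, offered as an illustration rather than the document's own code, Heun's method is one of the simplest predictor-corrector schemes for integrating an ordinary differential equation dy/dt = f(t, y). An explicit Euler step predicts the value at the end of the interval, and the corrector averages the slopes at both ends. The function name `heun` and the test equation dy/dt = 4e^(0.8t) - 0.5y, y(0) = 2 (whose analytical solution is coded in `exact`) are assumptions chosen for illustration.

```python
import math
from typing import Callable


def heun(f: Callable[[float, float], float], t0: float, y0: float,
         h: float, n_steps: int) -> float:
    """Integrate dy/dt = f(t, y) from t0 using n_steps steps of size h (Heun's method)."""
    t, y = t0, y0
    for _ in range(n_steps):
        slope_start = f(t, y)
        # Predictor: explicit Euler estimate of y at t + h.
        y_pred = y + h * slope_start
        # Corrector: average the slopes at the start and the predicted end.
        slope_end = f(t + h, y_pred)
        y = y + h * (slope_start + slope_end) / 2.0
        t = t + h
    return y


# Assumed test problem: dy/dt = 4 e^(0.8 t) - 0.5 y, y(0) = 2.
def f(t: float, y: float) -> float:
    return 4.0 * math.exp(0.8 * t) - 0.5 * y


def exact(t: float) -> float:
    return (4.0 / 1.3) * (math.exp(0.8 * t) - math.exp(-0.5 * t)) + 2.0 * math.exp(-0.5 * t)


coarse = heun(f, 0.0, 2.0, 1.0, 1)   # one step of size 1
fine = heun(f, 0.0, 2.0, 0.1, 10)    # ten steps of size 0.1
print(coarse, fine, exact(1.0))
```

With a single step of size 1 the estimate at t = 1 is about 6.7011, against an analytical value of about 6.1946; refining the step to 0.1 should shrink the error substantially, as expected of a second-order method.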

Related papers

International Journal for Research in Applied Science and Engineering Technology (IJRASET), 2020

The objective of this review paper is to review the concept of errors and their computation, including different types of errors such as absolute error, relative error, random error, and percentage error. Errors play an important role in any measurement or calculation. Every measurement we make is an approximation; 100% accuracy is not possible. If we measure the same quantity twice, the two values will differ somewhat, and this variation introduces an unwanted but unavoidable uncertainty into our measurements. Here we discuss a new approach to error calculation and how to overcome the drawbacks of errors. Keywords: systematic error, gross error, random error, absolute error, relative error, percentage error.
I. INTRODUCTION
Error may be defined as the difference between the measured value and the actual value. When we measure an unknown quantity with a measuring instrument, the value we obtain is called the measured value, and it differs from the actual value of the quantity. For example, if we repeat a practical measurement twice, we generally obtain two different results, separated by a small or sometimes large error. Occasionally the two measured values coincide, but this is the exception; the results need not be the same each time. The difference between the measured value and the actual value is termed the error. Errors can occur in any kind of calculation: mathematical, digital, technical, measurement, computational, and so on. In short, errors occur in every sector. Even computers, among the most advanced products of modern technology, produce errors in computational calculations.
While performing experiments with industrial instruments, it is very important to operate them carefully to reduce errors. Some errors are constant, while others are random, and we cannot predict where an error will occur. Accuracy measures are used to evaluate methods in major comparative studies of forecasting. Among the earliest and most popular are the root mean square error (RMSE) and the mean absolute percentage error (MAPE). These measures are widely used despite well-known issues such as high sensitivity to outliers. The errors they report may be small and therefore seem good, for example an RMSE of 0.1% or a MAPE of 1%. Similarly, a MAPE of 1.3% may seem good, yet it can be larger than the average error: when the MAPE is compared with the average fluctuation of a stock price, it turns out to be larger than that fluctuation. Such poor interpretation arises mainly from the lack of a comparable benchmark for the accuracy measure.
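The error measures named in this abstract can be written down directly. The sketch below uses the standard textbook definitions (absolute error |true - approx|, relative error as absolute error divided by |true|, percentage error as 100 times the relative error, RMSE, and MAPE); the function names are illustrative, not taken from the paper. Squaring in RMSE is what makes it sensitive to outliers, and MAPE is undefined when an actual value is zero.

```python
import math
from typing import Sequence


def absolute_error(true_value: float, approx: float) -> float:
    return abs(true_value - approx)


def relative_error(true_value: float, approx: float) -> float:
    # Undefined when the true value is zero.
    return absolute_error(true_value, approx) / abs(true_value)


def percentage_error(true_value: float, approx: float) -> float:
    return 100.0 * relative_error(true_value, approx)


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean square error: squaring lets large deviations (outliers) dominate."""
    n = len(actual)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n)


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error; undefined if any actual value is zero."""
    n = len(actual)
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / n


actual = [100.0, 200.0, 300.0]
predicted = [110.0, 190.0, 300.0]
print(rmse(actual, predicted), mape(actual, predicted))
```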

ISTJ, 30/1/2024

Computer operations rely heavily on numerical methods to ensure accurate and efficient results. However, despite advances in technology, errors may still arise from various factors such as hardware malfunctions, software bugs, and human errors in data input. Thus, it is essential to estimate and analyze the potential errors in computer operations to maintain the integrity and reliability of the results. This paper presents an approach to estimating errors in computer operations based on numerical methods.
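One standard quantity for estimating round-off error in floating-point operations (named here as an illustration, not necessarily the approach the paper proposes) is the machine epsilon: the gap between 1.0 and the next larger representable number. The minimal sketch below assumes IEEE 754 double precision, which is what Python floats use, and compares the loop result with `sys.float_info.epsilon`.

```python
import sys


def machine_epsilon() -> float:
    """Halve eps until adding eps/2 to 1.0 no longer changes the result."""
    eps = 1.0
    while 1.0 + eps / 2.0 > 1.0:
        eps /= 2.0
    return eps


eps = machine_epsilon()
print(eps, sys.float_info.epsilon)

# Round-off in a single addition: the computed sum is not exactly 0.3,
# but its relative error is bounded by the machine epsilon.
computed = 0.1 + 0.2
rel_err = abs(computed - 0.3) / 0.3
print(computed == 0.3, rel_err <= eps)
```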

This section reviews general information concerned with the quantification of errors, beginning with the concept of significant digits. Significant digits indicate how much confidence one has in a reported number. For example, if someone asked me what the population of my county is, I would simply respond, "The population of the Adama area is 1 million!" Is it exactly one million? "I don't know! But I am quite sure that it is not two million." The problem arises if someone were going to give me $100 for every citizen of the county; in that case I would need an exact count. That count would have been 1,079,587 this year. So in the statement that the population is 1 million there is only one significant digit, namely the 1, whereas in the statement that the population is 1,079,587 there are seven significant digits, meaning that I am confident in the number up to the seventh digit. How, then, do we distinguish the number of correct digits in 1,000,000 and 1,079,587? For that, one may use scientific notation, which states the number of significant digits explicitly: 1 × 10^6 has one significant digit, whereas 1.079587 × 10^6 has seven.
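Significant digits also give a practical stopping rule for iterative methods. A commonly cited criterion (due to Scarborough) stops when the approximate percent relative error e_a = |(current - previous) / current| × 100% falls below e_s = (0.5 × 10^(2 - n))%, which is intended to make the result correct to at least n significant digits. The sketch below applies it to the Maclaurin series for e^x; the function name `exp_series` and the choice of series are illustrative assumptions, not from the document.

```python
import math


def exp_series(x: float, n_sig: int) -> tuple[float, float, int]:
    """Sum the Maclaurin series of e**x until the approximate relative error
    drops below the Scarborough tolerance for n_sig significant digits.

    Returns (estimate, approximate percent relative error, number of terms used).
    """
    tol_percent = 0.5 * 10 ** (2 - n_sig)
    estimate = 1.0  # first term of the series
    term = 1.0
    k = 0
    while True:
        k += 1
        term *= x / k  # next term, x**k / k!
        previous = estimate
        estimate += term
        approx_err = abs((estimate - previous) / estimate) * 100.0
        if approx_err < tol_percent:
            return estimate, approx_err, k + 1


estimate, approx_err, terms = exp_series(0.5, 3)
true_err = abs((math.exp(0.5) - estimate) / math.exp(0.5)) * 100.0
print(estimate, approx_err, terms, true_err)
```

For x = 0.5 and three significant digits (e_s = 0.05%), the loop stops after six terms, and the true relative error also lies below the tolerance, as the criterion intends.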

AIChE Journal, 2006

A connection between precision and downside expected lost revenue for linear systems was developed in previous work. Having the value of precision and accuracy in economic terms helps justify the upgrade of instrumentation and/or the increase in corrective maintenance repair rate. The connection between accuracy and economic value is extended in this article to the case where biases are present and in the context of existing corrective maintenance capabilities.

Himalayan Physics, 2013

The process of evaluating the uncertainty associated with a measurement result is often called uncertainty analysis or error analysis. Without proper error analysis, no valid scientific conclusions can be drawn. The uncertainty of a single measurement is limited by the precision and accuracy of the measurement, and errors propagate through the mathematical operations performed on measured values. Every deviation of an experimental result from the expected one is significant. Even at the undergraduate level in our domestic universities, students should carry out error analysis and interpret their results instead of merely listing precautions. This approach would familiarize students with error analysis at an advanced level. Otherwise, the hesitation and difficulty with error analysis that stun beginning M.Sc. students will persist, and the need to standardize our lab reports will remain unaddressed for several more years. (The Himalayan Physics, Vol. 3, No. 3, Jul...)
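How errors propagate through arithmetic operations can be illustrated with the usual first-order rules, assuming independent random uncertainties (an assumption, not a statement from the abstract): for a sum or difference the absolute uncertainties add in quadrature, and for a product the relative uncertainties add in quadrature. The function names and the rectangle measurements below are hypothetical.

```python
import math


def sum_uncertainty(u_a: float, u_b: float) -> float:
    """Uncertainty of q = a + b (or a - b) for independent a and b."""
    return math.hypot(u_a, u_b)


def product_uncertainty(a: float, u_a: float, b: float, u_b: float) -> float:
    """Uncertainty of q = a * b for independent a and b:
    relative uncertainties combine in quadrature."""
    q = a * b
    return abs(q) * math.hypot(u_a / a, u_b / b)


# Hypothetical measurements: rectangle sides 2.0 +/- 0.1 and 3.0 +/- 0.2 (same units).
semi_perimeter_u = sum_uncertainty(0.1, 0.2)
area_u = product_uncertainty(2.0, 0.1, 3.0, 0.2)
print(semi_perimeter_u, area_u)
```

Here the area 6.0 carries an uncertainty of 0.5, because the relative uncertainties 0.05 and 0.0667 combine to 1/12.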

Numerische Mathematik, 1986

Consciousness and Cognition, 2019

Recent studies have shown that participants can keep track of the magnitude and direction of their errors while reproducing target intervals (Akdoğan & Balcı, 2017) and producing numerosities with sequentially presented auditory stimuli (Duyan & Balcı, 2018). Although the latter work demonstrated that error judgments were driven by the number rather than the total duration of sequential stimulus presentations, the number and duration of stimuli are inevitably correlated in sequential presentations. This correlation empirically limits the purity of the characterization of "numerical error monitoring". The current work expanded the scope of numerical error monitoring as a form of "metric error monitoring" to numerical estimation based on simultaneously presented array of stimuli to control for temporal correlates. Our results show that numerical error monitoring ability applies to magnitude estimation in these more controlled experimental scenarios underlining its ubiquitous nature. Recently, a number of studies provided evidence for a metric error monitoring mechanism in magnitude representations (eg.

Trends Journal of Sciences Research

The paper presented the results of research on the reliability of computer calculations. Relevant examples of incorrect program operation were demonstrated, both quite simple ones and much less obvious ones such as S. Rump's example. In addition to mathematical explanations, the authors focused on purely software means of controlling the accuracy of complex calculations. For this purpose, examples of the effective use of the decimal and fractions modules in Python 3.x were given.
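Rump's example evaluates f(a, b) = 333.75 b^6 + a^2 (11 a^2 b^2 - b^6 - 121 b^4 - 2) + 5.5 b^8 + a / (2b) at a = 77617, b = 33096. For these inputs the polynomial part is exactly -2 (because a^2 = 5.5 b^2 + 1), so the true value is -2 + a/(2b) = -54767/66192 ≈ -0.8273960599. The sketch below, an illustration rather than the paper's code, evaluates the expression with Python floats, with `fractions.Fraction` (exact rational arithmetic), and with `decimal.Decimal` at a user-chosen precision. The helper name `rump` is hypothetical, and 60 digits is an arbitrary precision chosen to exceed the roughly 38 digits the intermediate terms need.

```python
from decimal import Decimal, localcontext
from fractions import Fraction


def rump(a, b, num):
    """Evaluate Rump's expression; num converts the decimal constants
    into the same number type as a and b (float, Fraction or Decimal)."""
    c1 = num("333.75")
    c2 = num("5.5")
    return (c1 * b**6
            + a**2 * (11 * a**2 * b**2 - b**6 - 121 * b**4 - 2)
            + c2 * b**8
            + a / (2 * b))


# Double precision: the huge terms (about 1e36) cancel catastrophically.
float_result = rump(77617.0, 33096.0, float)

# Exact rational arithmetic.
exact_result = rump(Fraction(77617), Fraction(33096), Fraction)

# Decimal arithmetic with enough digits to hold every intermediate term exactly.
with localcontext() as ctx:
    ctx.prec = 60
    decimal_result = rump(Decimal(77617), Decimal(33096), Decimal)

print(float_result)
print(exact_result, float(exact_result))
print(decimal_result)
```

The float result lands far from the true value, while the `Fraction` and high-precision `Decimal` results agree with -54767/66192.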

International Journal of Scientific & Technology Research, 2015

For decades, symbolic computation has been impossible to ignore in real-time calculations. In discussing various automated machines that perform approximate calculations, we found that wherever there are inputs and corresponding outputs, error is inevitable. However, error can be minimized by using suitable algorithms. This study focuses on techniques used for error correction in numeric and symbolic computations. After reviewing previously published techniques, we analyze them with respect to several parameters. The experimental results show that these algorithms perform better in terms of accuracy, performance, cost, validity, safety, security, reliability, and power consumption.
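One classic example of an algorithm that reduces accumulated rounding error (offered as an illustration, not as one of the techniques the paper reviews) is Kahan's compensated summation, which carries the low-order bits lost in each addition in a separate correction term. The sketch compares it with plain left-to-right summation and with the standard-library `math.fsum`, which tracks multiple partial sums to avoid loss of precision. The function names are illustrative.

```python
import math


def naive_sum(values):
    total = 0.0
    for v in values:
        total += v
    return total


def kahan_sum(values):
    """Compensated summation: feed each step's rounding error back into the next addition."""
    total = 0.0
    compensation = 0.0
    for v in values:
        y = v - compensation
        t = total + y
        # (t - total) is the part of y actually added; subtracting y
        # leaves the (negated) rounding error of this addition.
        compensation = (t - total) - y
        total = t
    return total


values = [0.1] * 10
print(naive_sum(values), kahan_sum(values), math.fsum(values))
```

Adding 0.1 ten times naively gives a result just below 1.0, whereas the compensated sum matches `math.fsum`.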

Journal of Complexity, 1986

The error of a numerical method may be much smaller for most instances than for the worst case. Also, two numerical methods may have the same maximal error although one of them usually is much better than the other. Such statements can be made precise by concepts from average case analysis. We give some examples where such an average case analysis seems to be more sensible than a worst case analysis.

Constitutional Challenges in the Algorithmic Society

Our lives are increasingly inhabited by technological tools that help us deliver our workload, connect with our families and relatives, and enjoy leisure activities. Credit cards, smartphones, trains, and so on are all tools that we use every day without noticing that each of them works only through its internal 'code'. Those objects embed software programmes, and each piece of software is based on a set of algorithms. Thus we may affirm that most (if not all) of our experiences are filtered by algorithms each time we use such 'coded objects'.[1]

15.1.1 A Preliminary Distinction: Algorithms and Soft Computing

According to computer science, algorithms are automated decision-making processes to be followed in calculations or other problem-solving operations, especially by a computer.[2] Thus an algorithm is a detailed and numerically finite series of instructions that can be processed through a combination of software and hardware tools: algorithms start from an initial input and reach a prescribed output, based on a subsequent set of commands that can involve several activities, such as calculation, data processing, and automated reasoning. Achieving the solution depends on the correct execution of the instructions.[3] However, it is

The contribution is based on the analysis developed within a DG Justice supported project eNACT (GA no. 763875). The responsibility for errors and omissions remains with the author.

Applied Mathematical Modelling, 2002

Computational fluid dynamics (CFD) computer codes have become an integral part of the analysis and scientific investigation of complex, engineering flow systems. Unfortunately, inherent in the solutions from simulations performed with these computer codes is error or uncertainty in the results. The issue of numerical uncertainty addresses the development of methods to define the magnitude of error or to bound the error in a given simulation. This paper reviews the status of methods for evaluation of numerical uncertainty, and provides a direction for the effective use of some techniques in estimating uncertainty in a simulation.
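One widely used family of techniques for estimating discretization error (named here as an example, not as a summary of the paper) is Richardson extrapolation combined with Roache's grid convergence index (GCI). The sketch below uses the composite trapezoidal rule on the integral of e^x from 0 to 1 at three step sizes as a stand-in for three successively refined CFD grids with refinement ratio r = 2. The function names are hypothetical, and the safety factor 1.25 is the value commonly recommended for three-grid studies.

```python
import math


def trapezoid(f, a, b, n):
    """Composite trapezoidal rule with n equal panels (stands in for a 'grid')."""
    h = (b - a) / n
    interior = sum(f(a + i * h) for i in range(1, n))
    return h * (0.5 * f(a) + interior + 0.5 * f(b))


def observed_order(coarse, medium, fine, r):
    """Observed order of accuracy from three solutions with constant refinement ratio r."""
    return math.log((coarse - medium) / (medium - fine)) / math.log(r)


def richardson(medium, fine, r, p):
    """Richardson-extrapolated estimate from the two finest solutions."""
    return fine + (fine - medium) / (r**p - 1)


def gci_fine(medium, fine, r, p, safety=1.25):
    """Grid convergence index of the fine solution (a relative error band)."""
    rel = abs((fine - medium) / fine)
    return safety * rel / (r**p - 1)


f1 = trapezoid(math.exp, 0.0, 1.0, 4)   # coarse
f2 = trapezoid(math.exp, 0.0, 1.0, 8)   # medium
f3 = trapezoid(math.exp, 0.0, 1.0, 16)  # fine
p = observed_order(f1, f2, f3, 2.0)
extrapolated = richardson(f2, f3, 2.0, p)
gci = gci_fine(f2, f3, 2.0, p)
exact = math.e - 1.0
print(p, abs(f3 - exact), abs(extrapolated - exact), gci)
```

Because the exact integral is known here, one can check that the observed order comes out close to the trapezoidal rule's theoretical order of 2 and that the extrapolated value is closer to e - 1 than the finest-grid result. In a real simulation the exact solution is unknown, which is why such estimates are needed.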

Since the beginning of modern measurement science, experimenters have faced the problem of dealing with systematic effects, as distinct from, and opposed to, random effects. Two main schools of thought arose from the empirical and theoretical exploration of the problem: one holds that the two kinds of effect should be kept and reported separately; the other indicates ways to combine them into a single numerical value for the total uncertainty (often called 'error'). The second approach was adopted by the GUM, which generally assumes that the expected value of systematic effects is zero by requiring that, for every systematic effect taken into account in the model, a corresponding 'correction' be applied to the measured values before the uncertainty analysis is performed. On the other hand, classical statistics calls the value of the measurand (the quantity intended to be the object of measurement) its 'true value', admitting that such a value exists objectively (e.g. the value of a fundamental constant) and that any experimental operation aims at obtaining an ideally exact measure of it. However, because uncertainty affects every measurement process, this goal can be attained only approximately, in the sense that nobody can ever know exactly how much any measured value differs from the true value. The paper discusses the credibility of the numerical value attributed to an estimated correction, compared with the credibility of the estimate of the location of the true value, and concludes that the true value of a correction should be considered as imprecisely evaluable as the true value of any 'input quantity' and of the measurand itself. It follows that the distinction between 'input quantities' and 'corrections' is neither justified nor useful.

A scrutiny of the contributions of key mathematicians and scientists shows that there has been much controversy (throughout the development of mathematics and science) concerning the use of mathematics and the nature of mathematics too. In this work, we try to show that arithmetical operations of approximation lead to the existence of a numerical uncertainty, which is quantic, path dependent and also dependent on the number system used, with mathematical and physical implications. When we explore the algebraic equations for the fine structure constant, the conditions exposed in this work generate paradoxical physical conditions, where the solution to the paradox may be in the fact that the fine-structure constant is calculated through different ways in order to obtain the same value, but there is no relationship between the fundamental physical processes which underlie the calculations, since we are merely dealing with algebraic relations, despite the expressions having the same physical dimensions.

Hasan S Abd-Alqader
